Tutorial: Covid Vaccination Card Verification -- Part 1 of 2

Part 1: Image Alignment

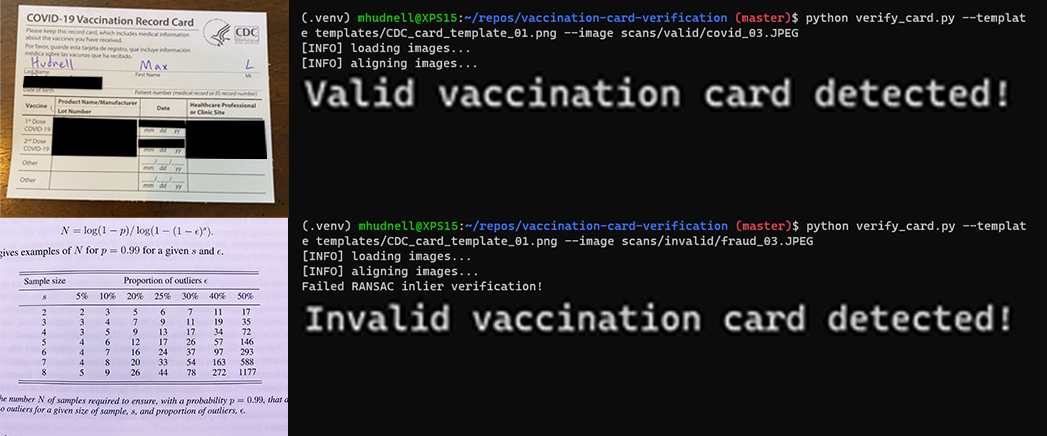

Example usage.

Now that over half of all Americans have received at least one COVID vaccination shot, there has been discussion about requiring proof of vaccination for admittance into places like hotels, airports, and cruises. The idea of vaccine passports has been proposed and may be coming in the near future.

However, for the time being, the only thing one can show as any sort of proof they’ve been vaccinated is their CDC-issued COVID-19 vaccination card. In this blog post, we share a method for detecting these cards and providing automatic verification.

Our program, implemented in Python, takes an image as input and outputs whether a COVID vaccination card is present in the image. We encourage you to visit our GitHub repository and try it out for yourself, or even use it in your own verification pipeline.

Automated verification is a multi-step process that performs image alignment, optical character recognition (OCR), template matching, and more. We provide a full tutorial detailing our implementation below.

Note that this program should not be relied on in critical use cases, since a COVID vaccination card can easily be faked.

Tutorial Part 1: Image Alignment

The first step in the verification process is to align the input image to a template image, which in this case is a blank COVID vaccination card. Image alignment, also known as image registration, is a well-studied problem in computer vision. We build on the document registration implementation by Adrian Rosebrock at PyImageSearch. We recommend going through his tutorial for the full details, but below we briefly go over the highlights of the procedure.

In order to align the images, we must determine the homography matrix, which provides us with a mapping between the input scan and the template image. To estimate a 2D homography matrix, we will need at least four keypoint matches between the two images¹ (preferably many more than four, since there is a high probability some of the matches will be incorrect).
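To see where the number four comes from, here is a brief sketch in the usual homogeneous-coordinate notation (the symbols are ours for illustration, following the conventions in [1]). A homography sends a scan point $(x, y)$ to a template point $(u, v)$ as follows:

```latex
\begin{pmatrix} x' \\ y' \\ w' \end{pmatrix}
=
\begin{pmatrix}
h_{11} & h_{12} & h_{13} \\
h_{21} & h_{22} & h_{23} \\
h_{31} & h_{32} & h_{33}
\end{pmatrix}
\begin{pmatrix} x \\ y \\ 1 \end{pmatrix},
\qquad
(u, v) = \left( \frac{x'}{w'},\; \frac{y'}{w'} \right)
```

Because multiplying the matrix by any nonzero constant leaves $(u, v)$ unchanged, only 8 of its 9 entries are free. Each keypoint match contributes two equations (one for $u$, one for $v$), so four matches, with no three of the points collinear, give the 8 equations needed.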

Before extracting keypoints, we first convert both the template and scan image to grayscale. We do this because the vaccination card consists of black ink printed on white paper, so we can safely assume any color information is noise caused by imperfect lighting/printing.

imageGray = cv2.cvtColor(image, cv2.COLOR_BGR2GRAY)

templateGray = cv2.cvtColor(template, cv2.COLOR_BGR2GRAY)
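As a rough illustration of what the conversion does to a single pixel, here is a minimal pure-Python sketch using the luma weights documented for OpenCV's RGB-to-gray conversion. The helper name `bgr_to_gray` and the sample pixel values are made up for this example; they are not part of our program.

```python
def bgr_to_gray(pixel):
    """Convert one (B, G, R) pixel with 0-255 channels to a single gray level."""
    b, g, r = pixel
    # Weighted sum of the channels; green contributes the most, blue the least.
    return round(0.114 * b + 0.587 * g + 0.299 * r)


white_paper = (255, 255, 255)
black_ink = (20, 20, 20)
tinted_paper = (200, 240, 250)  # made-up warm tint, e.g. from indoor lighting

for name, px in [("white", white_paper), ("ink", black_ink), ("tinted", tinted_paper)]:
    print(name, bgr_to_gray(px))
```

The tinted paper still lands far closer to white than to ink, which is why dropping the color channels loses little useful information for this kind of document.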

For feature extraction, we use the ORB feature detector, which, as the title of the ORB paper states, is “An efficient alternative to SIFT or SURF”². ORB is also free to use, whereas SURF is patented (SIFT was as well, until its patent expired in 2020).

orb = cv2.ORB_create(maxFeatures)

(kpsA, descsA) = orb.detectAndCompute(imageGray, None)

(kpsB, descsB) = orb.detectAndCompute(templateGray, None)

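We then use the OpenCV brute-force keypoint matcher, with Hamming distance as the distance measure (ORB descriptors are binary strings, so the distance between two descriptors is the number of bits in which they differ). This provides us with keypoint matches between the two images.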

method = cv2.DESCRIPTOR_MATCHER_BRUTEFORCE_HAMMING

matcher = cv2.DescriptorMatcher_create(method)

matches = matcher.match(descsA, descsB, None)
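To make the matching step concrete, here is a minimal pure-Python sketch of brute-force matching with Hamming distance. It is a toy stand-in, not OpenCV's implementation: the function names are ours, and the descriptors are 2 bytes long for readability, whereas ORB descriptors are 32 bytes.

```python
def hamming(a: bytes, b: bytes) -> int:
    """Number of differing bits between two equal-length binary descriptors."""
    return sum(bin(x ^ y).count("1") for x, y in zip(a, b))


def brute_force_match(query, train):
    """For each query descriptor, find the nearest train descriptor.

    Returns a list of (query_index, train_index, distance) tuples.
    """
    matches = []
    for qi, q in enumerate(query):
        # Compare against every train descriptor and keep the closest one.
        ti, dist = min(
            ((ti, hamming(q, t)) for ti, t in enumerate(train)),
            key=lambda pair: pair[1],
        )
        matches.append((qi, ti, dist))
    return matches


query = [bytes([0b11110000, 0b00001111]), bytes([0b10101010, 0b01010101])]
train = [bytes([0b10101010, 0b01010111]), bytes([0b11110000, 0b00001110]), bytes([0, 0])]
print(brute_force_match(query, train))
```

Every query descriptor gets a match, whether or not a true counterpart exists in the other image, which is one reason so many raw matches turn out to be wrong.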

This produces a large number of matches, many of which are incorrect. To counter this, we sort the matches by descriptor distance (smallest first) and keep only the best 20% of them. We then copy the coordinates of the matched keypoints into two arrays, ptsA and ptsB.

matches = sorted(matches, key=lambda x: x.distance)

keep = int(len(matches) * keepPercent)

matches = matches[:keep]

# allocate only after truncating, so no all-zero rows are passed on as matches

ptsA = np.zeros((len(matches), 2), dtype="float")

ptsB = np.zeros((len(matches), 2), dtype="float")

for (i, m) in enumerate(matches):

    ptsA[i] = kpsA[m.queryIdx].pt

    ptsB[i] = kpsB[m.trainIdx].pt
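One edge case worth considering: when an image yields very few matches, keeping a fixed percentage can leave fewer than the four matches a homography needs. The sketch below shows one possible guard. The `Match` tuple is a hypothetical stand-in for OpenCV's match objects, and `keep_best` and `min_matches` are names we introduce for illustration; none of this is in our program as published.

```python
from collections import namedtuple

# Hypothetical stand-in for cv2.DMatch, carrying only the fields used here.
Match = namedtuple("Match", ["queryIdx", "trainIdx", "distance"])


def keep_best(matches, keep_percent=0.2, min_matches=4):
    """Sort by distance and keep the best fraction, but never fewer than
    min_matches (as long as that many matches exist at all)."""
    ranked = sorted(matches, key=lambda m: m.distance)
    keep = max(int(len(ranked) * keep_percent), min_matches)
    return ranked[:keep]


distances = [9, 3, 7, 1, 8, 2, 6, 4, 5, 0]
matches = [Match(i, i, float(d)) for i, d in enumerate(distances)]
best = keep_best(matches)
print([m.distance for m in best])
```

With ten matches, 20% would keep only two, so the guard raises that to four. If fewer than four matches exist in total, the caller still has to treat alignment as failed.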

Notice that there are still many incorrect matches, even though we’ve discarded the least confident ones. This isn’t a huge problem, because the method we use for homography estimation (RANSAC) is robust to outliers (in this case, the outliers are the incorrect matches).

RANSAC works by estimating a model from a random minimal sample (in this case, the four matches needed to compute the homography) and then checking how well that model fits the other data points. Many minimal samples are tested, and the one with the most “support” from the other data points is deemed the winner and refined by fitting to its supporting points. The idea is that the true estimate will win out even if there are many outliers, because outliers are unlikely to be consistent with one another.

(H, mask) = cv2.findHomography(ptsA, ptsB, method=cv2.RANSAC, ransacReprojThreshold=5)
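The sample-and-score loop is easier to see on a simpler model. The following pure-Python sketch applies the same idea to fitting a line, where the minimal sample is two points instead of four matches. It is a toy under our own assumptions (function names, threshold, and data are all made up) and does not reproduce OpenCV's internals.

```python
import random


def fit_line(p, q):
    """Slope and intercept of the line through two points (the minimal sample)."""
    (x1, y1), (x2, y2) = p, q
    a = (y2 - y1) / (x2 - x1)
    return a, y1 - a * x1


def ransac_line(points, iterations=200, threshold=0.5, seed=0):
    """Return the sampled line with the most inliers, and those inliers."""
    rng = random.Random(seed)  # fixed seed so the sketch is repeatable
    best_model, best_inliers = None, []
    for _ in range(iterations):
        p, q = rng.sample(points, 2)
        if p[0] == q[0]:
            continue  # vertical line cannot be written as y = a*x + b
        a, b = fit_line(p, q)
        # "Support": points whose vertical distance to the line is small.
        inliers = [(x, y) for x, y in points if abs(y - (a * x + b)) <= threshold]
        if len(inliers) > len(best_inliers):
            best_model, best_inliers = (a, b), inliers
    return best_model, best_inliers


# Ten points on y = 2x + 1, plus four gross outliers.
points = [(x, 2 * x + 1) for x in range(10)] + [(1, 9), (3, -4), (6, 20), (8, 2)]
model, inliers = ransac_line(points)
print(model, len(inliers))
```

Any sample containing an outlier produces a line that few other points agree with, so it loses to a sample drawn from the true line. The returned inlier list plays the same role as the mask returned alongside H.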

Notice how the RANSAC algorithm is able to successfully filter out most of the incorrect matches! Only the inlier data points (the green matches in the image) are used for the homography estimation.

Now all that’s left to do is to use the estimated homography to warp the input image and align it to the template.

(h, w) = template.shape[:2]

aligned = cv2.warpPerspective(image, H, (w, h))
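To build intuition for what the warp does, the sketch below applies a homography to individual points by hand. The matrix here is a made-up example (scale by 0.5, then shift by (10, 20)); in the real pipeline H comes from the findHomography call, and `apply_homography` is a helper name we introduce only for illustration.

```python
def apply_homography(H, point):
    """Map an (x, y) point through a 3x3 homography given as nested lists."""
    x, y = point
    xp = H[0][0] * x + H[0][1] * y + H[0][2]
    yp = H[1][0] * x + H[1][1] * y + H[1][2]
    wp = H[2][0] * x + H[2][1] * y + H[2][2]
    # Divide by the third homogeneous coordinate to get back to pixel coordinates.
    return xp / wp, yp / wp


# Hypothetical homography, NOT one estimated from real images.
H = [
    [0.5, 0.0, 10.0],
    [0.0, 0.5, 20.0],
    [0.0, 0.0, 1.0],
]

# Corners of a hypothetical 800x600 input image.
corners = [(0, 0), (800, 0), (800, 600), (0, 600)]
projected = [apply_homography(H, c) for c in corners]
print(projected)
```

Projecting the input image's corners like this is also a cheap sanity check: if they land far outside the template or form a badly twisted quadrilateral, the estimated homography is probably wrong.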

The output is the aligned image!

Continue reading Part 2 to learn how to automatically verify that the aligned image is a vaccination card.

References

[1]: Hartley, Richard, and Andrew Zisserman. Multiple View Geometry in Computer Vision. 2nd ed., Cambridge University Press, 2003.

[2]: Rublee, Ethan, et al. “ORB: An efficient alternative to SIFT or SURF.” 2011 International Conference on Computer Vision. IEEE, 2011.